...

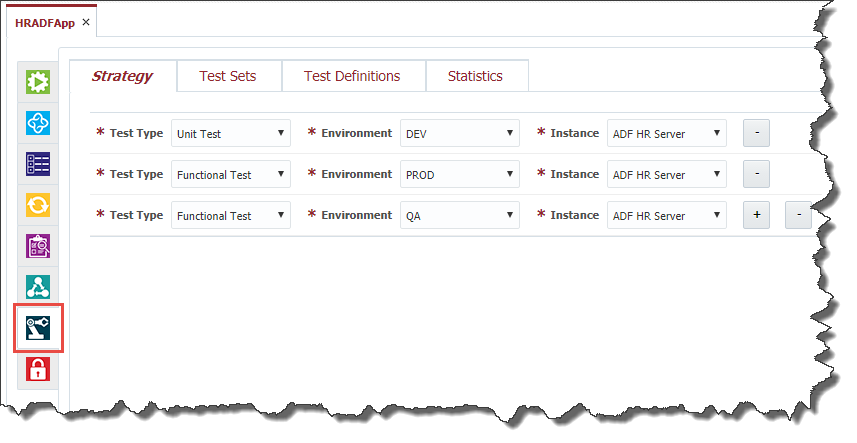

To configure the tests to be run for a project, click the Test Automation tab.

Test Automation Strategy

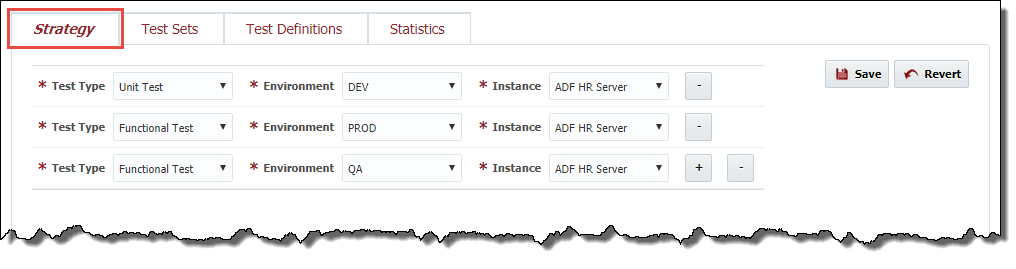

The test automation strategy defines the test types that can be performed for a project in each environment and instance where the project is deployed. QA engineers usually set up different instances of the application for different test types. For example, one instance may be good enough for functional testing because it provides a lot of available functionality, yet be unsuitable for performance testing. In that case there is a separate instance with a large amount of data for performance testing. To set up a new combination of test type, environment, and instance, click the "+" (plus) button and select values from the drop-down lists. To delete a combination, click the "-" (minus) button.

To save the test automation strategy for the project, click the Save button. To cancel the changes, click the Revert button.
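As an illustration only, a strategy can be thought of as a set of (test type, environment, instance) combinations. The sketch below is not FlexDeploy's data model or API; the class, the function, and the environment and instance names are all hypothetical.

```python
from typing import NamedTuple, Set


class Combination(NamedTuple):
    """One strategy row: a test type allowed on an environment/instance (hypothetical model)."""
    test_type: str
    environment: str
    instance: str


def allowed_test_types(strategy: Set[Combination], environment: str, instance: str) -> Set[str]:
    """Return the test types the strategy permits on the given environment and instance."""
    return {
        c.test_type
        for c in strategy
        if c.environment == environment and c.instance == instance
    }


# Hypothetical strategy: functional and performance tests use different instances.
strategy = {
    Combination("Functional", "QA", "app-qa-1"),
    Combination("Performance", "QA", "app-qa-perf"),
    Combination("Functional", "UAT", "app-uat-1"),
}

print(allowed_test_types(strategy, "QA", "app-qa-perf"))
```

Adding or deleting a row in the UI corresponds to adding or removing one `Combination` from the set in this model.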

...

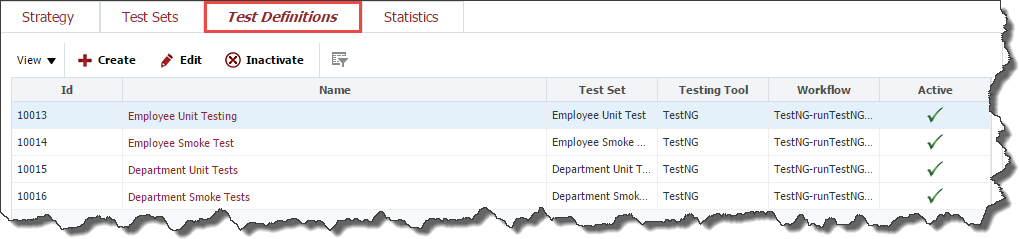

To view the list of test definitions defined for the project, select the Test Definitions tab.

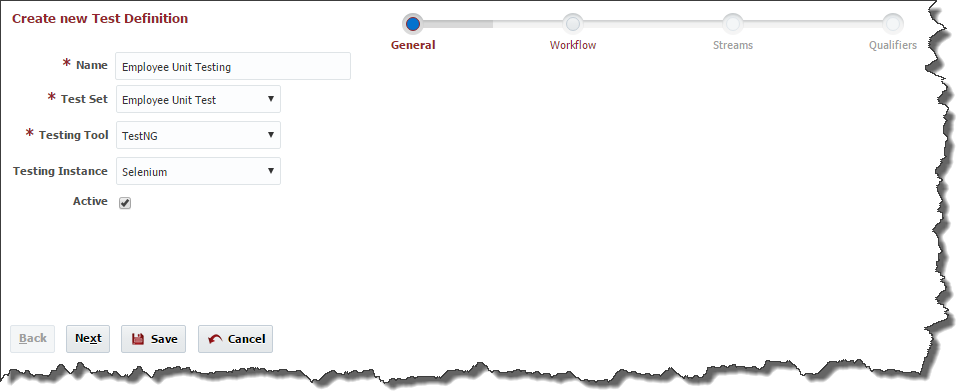

Creating a Test Definition

To create a test definition, click the Create button.

At the General step of the wizard, enter values for the following fields:

| Field Name | Required | Description |
|---|---|---|
| Name | Yes | Name for the test definition. |
| Test Set | Yes | Select the Test Set to which the test definition belongs. |
| Testing Tool | Yes | Select the Testing Tool that should be used to run the tests. |
| Testing Instance | No | Select the Testing Instance where the testing tool is installed. This can be useful for testing tools like OATS, Selenium, SoapUI, etc. These tools usually have their own standalone installations. For testing tools like JUnit or TestNG this field should be empty, which means that the testing tool is installed and running on each environment/instance where the project is deployed. |
| Active | Yes | A flag indicating whether this test definition is active. Defaults to "Yes". |

Click the Next button in order to specify a workflow for test executions.

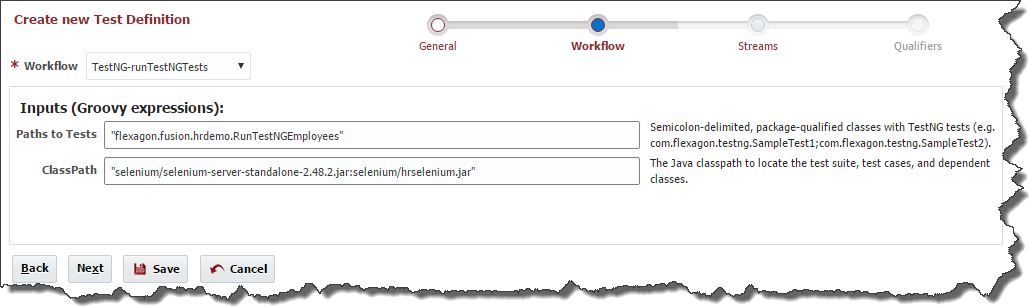

At the Workflow step of the wizard, select the workflow that will be used to run tests. Note that only a workflow of the Test Definition type can be selected here. Enter values for any inputs that are configured for the workflow.

| Tip |
|---|
| You will need to select an appropriate workflow for test executions. There are many workflows available out of the box for supported test tools, but you can define your own test tool and workflow as well. Depending on the workflow you select, you may have a few inputs to configure. |

Click the Next button in order to configure the Streams for the test definition.

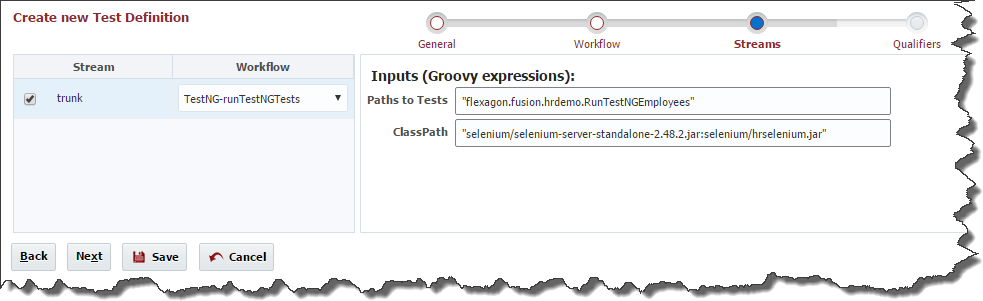

At the Streams step of the wizard, select the project streams for which the test definition is suitable. By default, all project streams are selected. It is possible to override workflow input values for each stream. It is also possible to change the workflow used to run tests for each project stream. By default, all project streams use the workflow and input values defined at the Workflow step.
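The default-plus-override behavior of the Streams step can be sketched as follows. This is a minimal model, not FlexDeploy's implementation: the class and function names and the workflow and input names are hypothetical. It also assumes that when a stream switches to a different workflow, only that stream's own inputs apply; the product may handle this case differently.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class WorkflowConfig:
    """Workflow and input values chosen at the Workflow step (hypothetical model)."""
    workflow: str
    inputs: Dict[str, str] = field(default_factory=dict)


@dataclass
class StreamOverride:
    """Optional per-stream changes made at the Streams step (hypothetical model)."""
    workflow: Optional[str] = None
    inputs: Dict[str, str] = field(default_factory=dict)


def effective_config(default: WorkflowConfig, override: Optional[StreamOverride]) -> WorkflowConfig:
    """Resolve the workflow and inputs a stream actually uses."""
    if override is None:
        # No override: the stream uses the Workflow-step defaults unchanged.
        return WorkflowConfig(default.workflow, dict(default.inputs))
    if override.workflow is not None and override.workflow != default.workflow:
        # Assumption: a different workflow has its own inputs, so defaults are dropped.
        return WorkflowConfig(override.workflow, dict(override.inputs))
    # Same workflow: stream-level input values replace the defaults by name.
    return WorkflowConfig(default.workflow, {**default.inputs, **override.inputs})


default = WorkflowConfig("example-test-workflow", {"SUITE": "smoke", "TIMEOUT": "60"})
print(effective_config(default, StreamOverride(inputs={"SUITE": "full"})))
```

A stream without an override resolves to the defaults, which mirrors the behavior described for the Workflow step.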

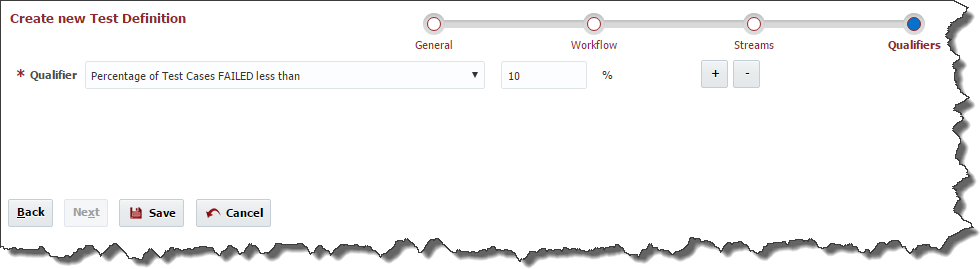

A Test Definition can analyze Test Results and conclude whether the test run succeeded or failed. This feature is based on Qualifiers. A test definition can contain a number of qualifiers, and the test definition run is considered successful only if all of its qualifiers return true. If no Qualifier is set up, the Test Definition result is considered a Success.

Click the Next button in order to configure Qualifiers for the test definition.

To add a new qualifier to the test definition, click the "+" (plus) button. To remove a qualifier from the test definition, click the "-" (minus) button.

FlexDeploy comes out-of-the-box with the following predefined test qualifiers:

| Qualifier | Argument | Notes |
|---|---|---|
| Number of Test Cases PASSED greater than "X" | Integer value | |
| Number of Test Cases FAILED less than "X" | Integer value | |
| Percentage of Test Cases PASSED greater than "X" % | Percentage value | |
| Percentage of Test Cases FAILED less than "X" % | Percentage value | |
| Average Response Time Less than or equal to | Integer value (milliseconds) | Set the expected maximum average response time. This applies to each test case executed by the definition, i.e. the average response time of every test case must be less than or equal to the specified amount. If the response time is higher in any test case, the test definition is considered Failed. |
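The way qualifiers combine can be sketched in a few lines. This is an illustrative model only, not FlexDeploy code: the `CaseResult` type and all function names are hypothetical. Treating an empty result list as failing the percentage qualifier is also an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CaseResult:
    """Outcome of one test case (hypothetical model)."""
    name: str
    passed: bool
    avg_response_ms: float


Qualifier = Callable[[List[CaseResult]], bool]


def passed_greater_than(x: int) -> Qualifier:
    """Number of Test Cases PASSED greater than X."""
    return lambda results: sum(r.passed for r in results) > x


def failed_less_than(x: int) -> Qualifier:
    """Number of Test Cases FAILED less than X."""
    return lambda results: sum(not r.passed for r in results) < x


def pct_passed_greater_than(x: float) -> Qualifier:
    """Percentage of Test Cases PASSED greater than X %."""
    def check(results: List[CaseResult]) -> bool:
        if not results:
            return False  # assumption: no results cannot satisfy a percentage
        return 100.0 * sum(r.passed for r in results) / len(results) > x
    return check


def avg_response_at_most(ms: int) -> Qualifier:
    """Average response time of every test case must be <= ms."""
    return lambda results: all(r.avg_response_ms <= ms for r in results)


def run_succeeded(results: List[CaseResult], qualifiers: List[Qualifier]) -> bool:
    """Success only if all qualifiers return true; no qualifiers means success."""
    return all(q(results) for q in qualifiers)


results = [
    CaseResult("login", True, 120.0),
    CaseResult("search", True, 300.0),
    CaseResult("checkout", False, 450.0),
]
print(run_succeeded(results, [passed_greater_than(1), avg_response_at_most(400)]))
```

Because `all()` over an empty list is true, a run with no qualifiers is reported as successful, matching the rule stated above.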

Click the Save button to save the changes to the test definition and return to the list of test definitions defined for the project or click on the Test Definition Name.

...

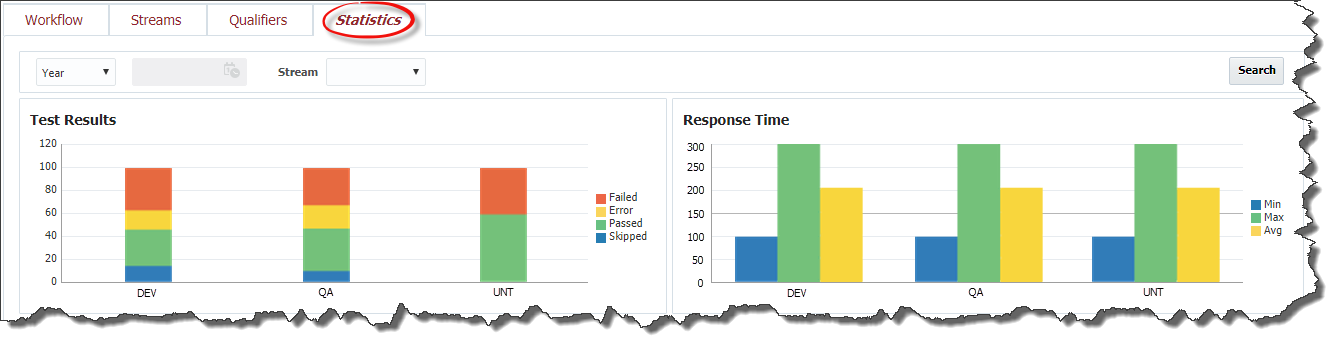

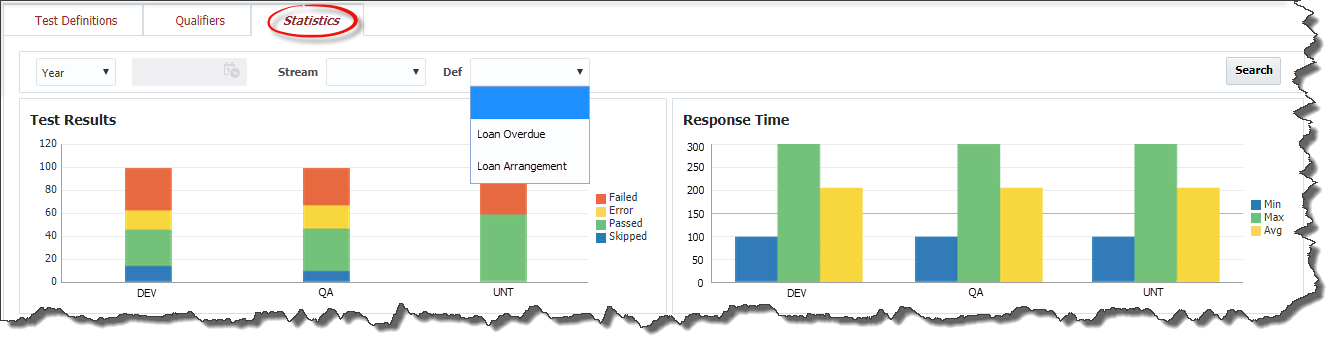

The test definition view screen also contains a Statistics tab that shows historical information about test definition executions.

There are two charts on the Statistics tab: Test Results and Response Time. The charts represent the same data that is described in the Dashboard section, only related to the Test Definition.

...

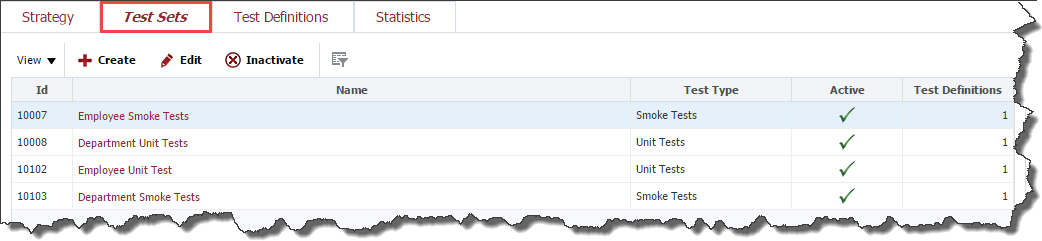

To view the list of test sets defined for the project, navigate to the Test Automation tab, as described in the Project Configuration section, and select the Test Sets tab.

Creating a Test Set

...

Click the Next button in order to configure Qualifiers for the test set. Qualifiers are added to and removed from a test set in the same way as for test definitions.

For Test Sets FlexDeploy provides the following preconfigured qualifiers:

...

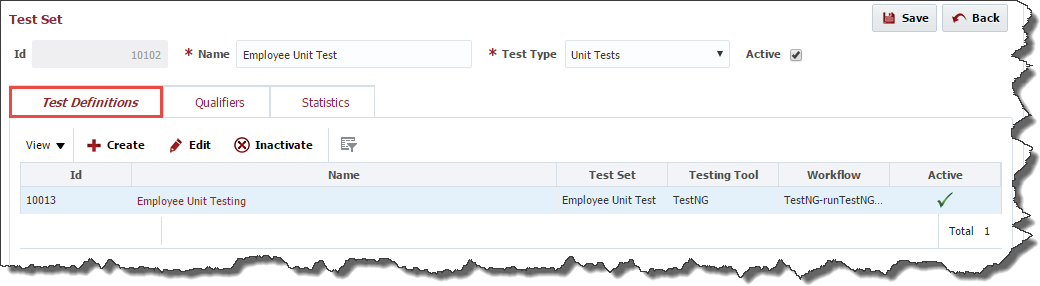

To edit or view a test set, select an existing test set and click the Edit button, or click the test set Name hyperlink.

The edit screen contains all attributes of the test set and displays Test Definitions, Qualifiers and Statistics data on separate tabs. The Test Definitions tab shows all test definitions related to this test set. The tab allows you to create and edit test definitions in the same way as described in the Test Definitions section. If no Qualifier is set up, the Test Set result is considered a Success.

Click the Save button to save the changes to the test set or click the Back button to cancel the changes and return to the list of test sets defined for the project.

...

The test set view screen contains a Statistics tab that shows historical information about test set executions. The tab contains Test Results and Response Time charts representing the same information that is described in the Dashboard section, only related to this test set.